Mind the Gap: Multi-Level Unsupervised Domain Adaptation for Cross-scene Hyperspectral Image Classification

Mingshuo Cai, Bobo Xi, Member, IEEE, Jiaojiao Li, Member, IEEE,

Shou Feng, Member, IEEE, Yunsong Li, Member, IEEE,

Zan Li, Senior Member, IEEE, and Jocelyn Chanussot, Fellow, IEEE


Abstract

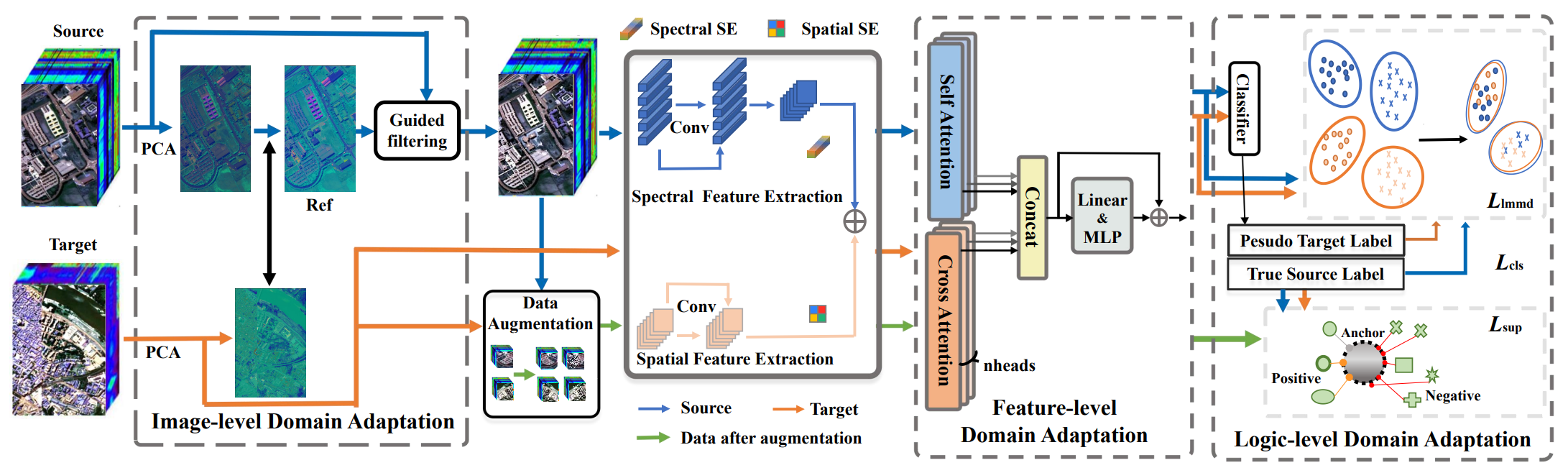

Recently, cross-scene hyperspectral image classification (HSIC) has attracted increasing attention, as it alleviates the lack of labeled samples in the target domain. Although collaborative source and target training has dominated this field, how to train effective feature extractors and overcome the intractable domain gaps remains a challenge. To cope with this issue, we propose a multi-level unsupervised domain adaptation (MLUDA) framework, which comprises image-, feature-, and logic-level alignment between domains to fully exploit the spectral-spatial information. Specifically, at the holistic image level, we propose a domain adaptation method named GuidePGC based on classic image matching techniques and the guided filter. Its adaptation results are physically explainable and can be checked by visual inspection. At the feature level, we design a multi-branch cross attention structure (MBCA) specifically for HSIC, which enhances the interaction between source- and target-domain features through dot-product attention. Finally, at the logic level, we adopt a supervised contrastive learning (SCL) approach that incorporates a pseudo-label strategy and a local maximum mean discrepancy loss, increasing the inter-class distance across domains and further improving classification performance. Experimental results on three benchmark cross-scene datasets demonstrate that the proposed method consistently outperforms the compared approaches.
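GuidePGC builds on the guided filter. To make that building block concrete, here is a minimal sketch of the classic gray-guide guided filter (He et al.) applied to a single band stored as a plain Python list. It is not the GuidePGC code: the image matching step is omitted, and the helper names (`box_mean`, `elementwise`, `guided_filter`) and the defaults for the window radius `r` and regularizer `eps` are illustrative assumptions.

```python
from typing import Callable, List

# Hypothetical helpers for illustration only; NOT the repository's GuidePGC code.
Image = List[List[float]]  # one band as a row-major 2-D list


def box_mean(img: Image, r: int) -> Image:
    """Mean over a (2r+1) x (2r+1) window, clipped at the borders, via an integral image."""
    h, w = len(img), len(img[0])
    # integral[i][j] holds the sum of img over rows < i and columns < j
    integral = [[0.0] * (w + 1) for _ in range(h + 1)]
    for i in range(h):
        row_sum = 0.0
        for j in range(w):
            row_sum += img[i][j]
            integral[i + 1][j + 1] = integral[i][j + 1] + row_sum
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        i0, i1 = max(0, i - r), min(h, i + r + 1)
        for j in range(w):
            j0, j1 = max(0, j - r), min(w, j + r + 1)
            total = integral[i1][j1] - integral[i0][j1] - integral[i1][j0] + integral[i0][j0]
            out[i][j] = total / ((i1 - i0) * (j1 - j0))
    return out


def elementwise(f: Callable[..., float], *imgs: Image) -> Image:
    """Apply f pixel by pixel across images of the same shape."""
    return [[f(*px) for px in zip(*rows)] for rows in zip(*imgs)]


def guided_filter(guide: Image, src: Image, r: int = 2, eps: float = 1e-3) -> Image:
    """Classic guided filter with a single-band guide: q = mean(a) * I + mean(b)."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    corr_ii = box_mean(elementwise(lambda g: g * g, guide), r)
    corr_ip = box_mean(elementwise(lambda g, p: g * p, guide, src), r)
    var_i = elementwise(lambda c, m: c - m * m, corr_ii, mean_i)
    cov_ip = elementwise(lambda c, mi, mp: c - mi * mp, corr_ip, mean_i, mean_p)
    # per-window linear coefficients; eps penalizes large a, so low-variance regions get smoothed
    a = elementwise(lambda cv, v: cv / (v + eps), cov_ip, var_i)
    b = elementwise(lambda mp, ai, mi: mp - ai * mi, mean_p, a, mean_i)
    # average the coefficients of all windows covering each pixel
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return elementwise(lambda ma, g, mb: ma * g + mb, mean_a, guide, mean_b)
```

With `guide` and `src` set to the same step-edge band and a small `eps`, the output keeps the edge essentially intact, whereas `box_mean` alone would blur it. This edge-preserving smoothing is the property the guided filter contributes.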

Architecture of MLUDA
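At the feature level, MBCA relies on dot-product attention between source- and target-domain features. The sketch below is not the MBCA module. It shows one branch of plain scaled dot-product cross attention in pure Python and makes three assumptions: source features act as queries, target features act as keys and values, and the learned query/key/value projections and the multi-branch structure are left out. All function names are hypothetical.

```python
import math
from typing import List

# Hypothetical single-branch sketch; NOT the repository's MBCA implementation.
Matrix = List[List[float]]  # rows are feature vectors (tokens)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax."""
    out = []
    for row in m:
        peak = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Assumption: queries come from the source domain and keys/values from the
    target domain, so each source token becomes a weighted mix of target tokens.
    """
    d = len(queries[0])
    scores = matmul(queries, transpose(keys))
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    return matmul(softmax_rows(scaled), values)


if __name__ == "__main__":
    source = [[1.0, 0.0], [0.0, 1.0]]              # two source feature vectors
    target = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three target feature vectors
    print(cross_attention(source, target, target))  # one fused vector per source token
```

Each output row is a convex combination of the value rows, weighted by how similar the query is to each key. Swapping the roles of the two domains, or running both directions, are equally plausible design choices that this sketch does not decide.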

Results

The following results were obtained on an NVIDIA 4090Ti.

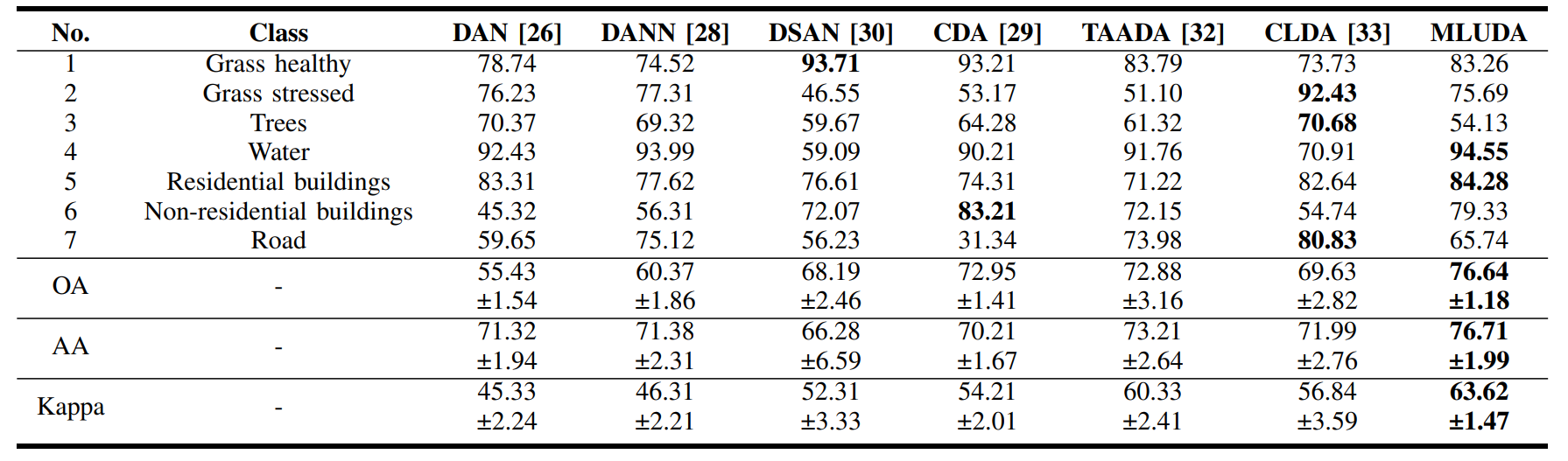

Results on Houston Dataset

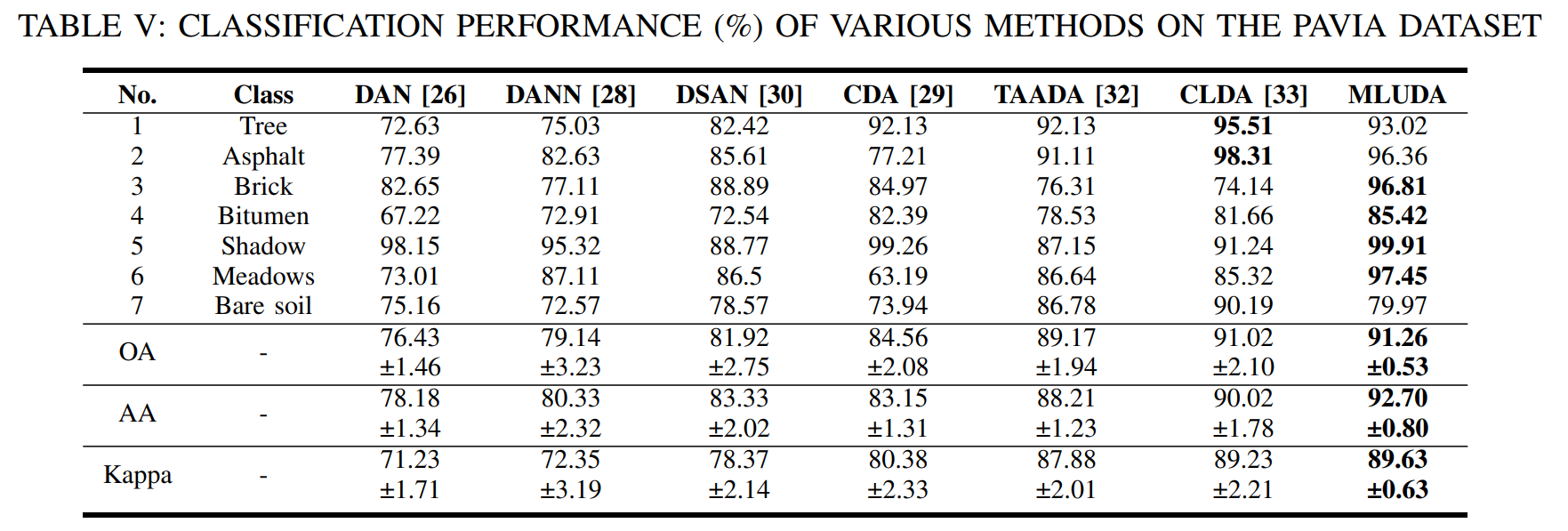

Results on PAVIA Dataset

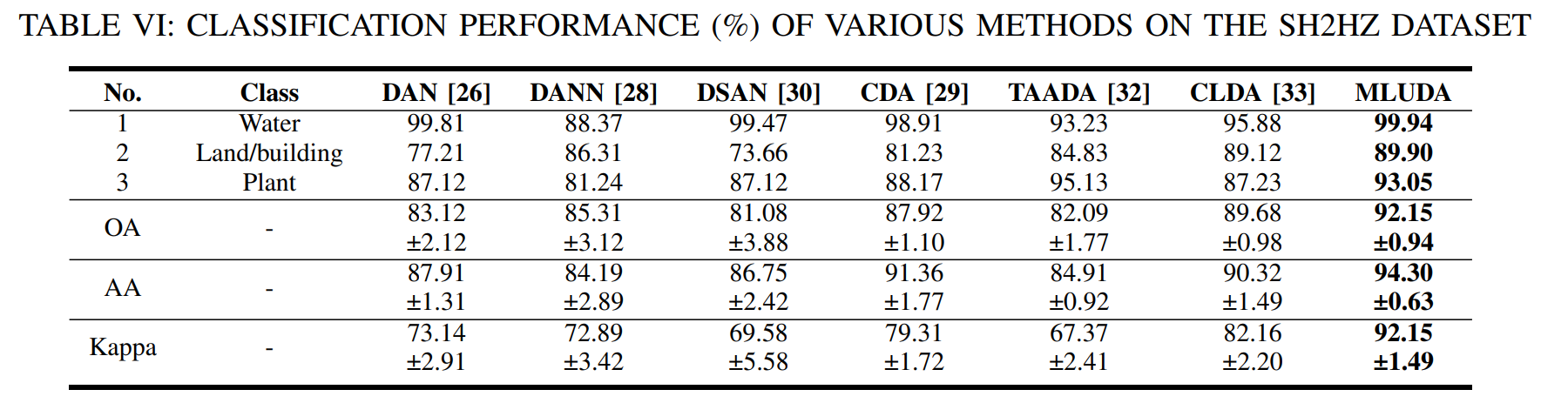

Results on SH2HZ Dataset

If you find our work helpful, please cite:

```bibtex
@ARTICLE{10543066,
  author={Cai, Mingshuo and Xi, Bobo and Li, Jiaojiao and Feng, Shou and Li, Yunsong and Li, Zan and Chanussot, Jocelyn},
  journal={IEEE Transactions on Geoscience and Remote Sensing},
  title={Mind the Gap: Multi-Level Unsupervised Domain Adaptation for Cross-scene Hyperspectral Image Classification},
  year={2024},
  volume={},
  number={},
  pages={1-1},
  keywords={Feature extraction;Image color analysis;Convolutional neural networks;Training;Task analysis;Visualization;Hyperspectral imaging;Cross-scene;domain adaptation;guided filter;cross attention;supervised contrastive learning},
  doi={10.1109/TGRS.2024.3407952}
}
```

If you have any questions, feel free to contact me via caimingshoo🥳google😲com